
Get better at Prompting LLMs


Nov 19, 2024

This blog post was written as a supporting article for a talk that was done at Google DevFest 2024 Brunei.


Hi, my name is Albert. For those of you who don't know me, I didn't start out in the tech industry. I was a Process Safety Engineer in the Petrochemical Industry for 7 years before I transitioned into writing code. I've been dabbling in web development since 2017, and when ChatGPT was released in Nov 2022, I was intrigued by the possibilities. I started introducing AI into my day-to-day work and building more applications that either promote the use of AI or use AI to improve an existing solution.

Looking back, building web applications from 2017 to 2022 was really different. Notable projects that I've been involved in are:

- Manamakan - Brunei's Food & Beverage Discovery Platform

- Mirath - An Online Platform to donate Set Perlengkapan Jenazah to the needy

- Sukarelawan Belia - An Online Volunteering Management System for COVID-19

It required a lot of manual work and the applications were not as smart as they are today. Refer to my post here which goes into more detail about how LLMs have transformed my life.

Since the advent of LLMs, I've been able to build applications that were previously not possible. In 2023, I introduced the first ever online Malay version (not just a PDF) of the Hansard of the Legislative Council of Brunei, and that's not all: I also used AI to translate the Hansard into English.

During my time at Greywing, I built an AI that handled email communication between businesses and port agents for the initial procurement process. We also built an AI that lets you talk directly to your data warehouse instead of having to write SQL queries.

And now, I've created Brunei's first ever search engine that gives you answers and content only from this country, unpolluted by the rest of the world. Because it's localised, you can easily look up specific things, like camping gear or facial products sold here.

In these 2 years, I've learned a lot about LLMs and how to maximise their potential for the problems I'm tackling. Prompting is a fancy way of saying that you are giving instructions to an AI. Sure, we can talk to it and instruct it the way we talk to our human peers, but that won't be as effective, because LLMs aren't exactly human and they have their own "language".

Prompting = Instructing AI


Don't worry, we don't need to learn a new programming language. Rather, it's about learning to communicate with a machine in a way that gives you the results you want.

Here's a very simple visual example to set the stage.

When I first started generating images with DALL-E, I quickly learnt that I was lacking in visual vocabulary. I would ask it to generate an image of "an otter". Sure, the image came out pretty good, but it wasn't wow.

caption: An otter by the stream

I eventually learnt that in the world of image generation, I needed to learn about visual styles, composition, camera and lens terms like wide-angle and telephoto, and the styles of prominent artists like Picasso, Van Gogh, and so on.

Here's a better prompt: "an otter that is suitable to be featured in a National Geographic magazine"

caption: An otter posing

caption: An otter mid-dive

You can instantly see the difference: the images are now more lively and engaging than the original.

More words and more context in the prompts we provide will, in most cases, give us better results.

Before I tell you more about prompting, let's take a quick dive into LLMs.

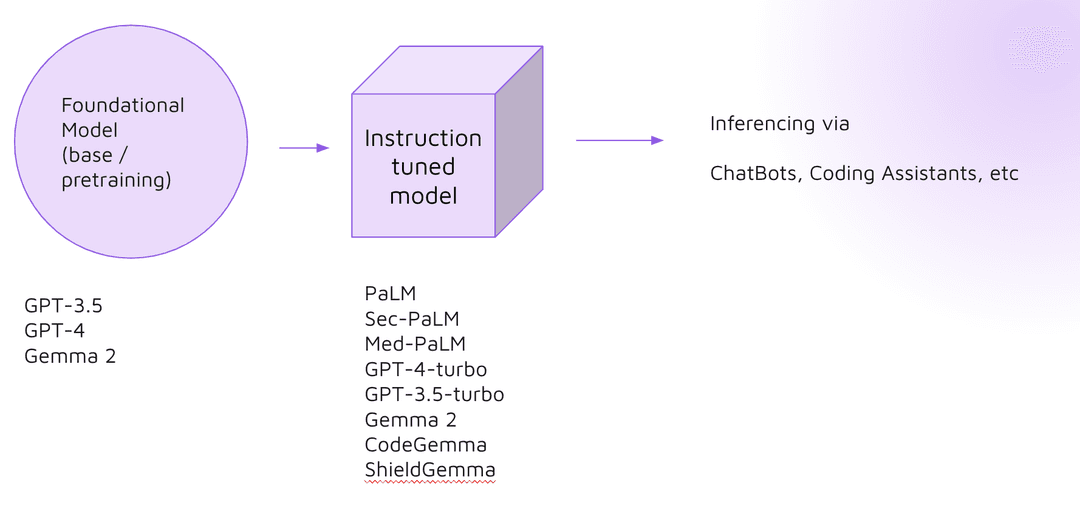

caption: Foundational Models

We've all heard of the term Large Language Models (LLMs), and some of you might also have heard of Foundational Models. Usually, when we talk about LLMs like GPT-3.5, GPT-4 or Gemini, the ones that we (regular people) work with, we are actually referring to instruction-tuned models. The companies behind them first train Foundational Models, then fine-tune them for specific tasks.

They are called instruction-tuned models because they are trained to follow instructions, more specifically in a chat-like conversation. Depending on the goal, they can also be fine-tuned for narrower tasks. Google's Gemma family, for example, also comes in the flavour of CodeGemma, which is fine-tuned to generate code, and ShieldGemma, which is fine-tuned to evaluate input prompts and output responses against a set of defined safety policies.

Once trained, these instruction-tuned models can be run on any machine with sufficient RAM or GPU memory to generate text, code and more. We call this inferencing.

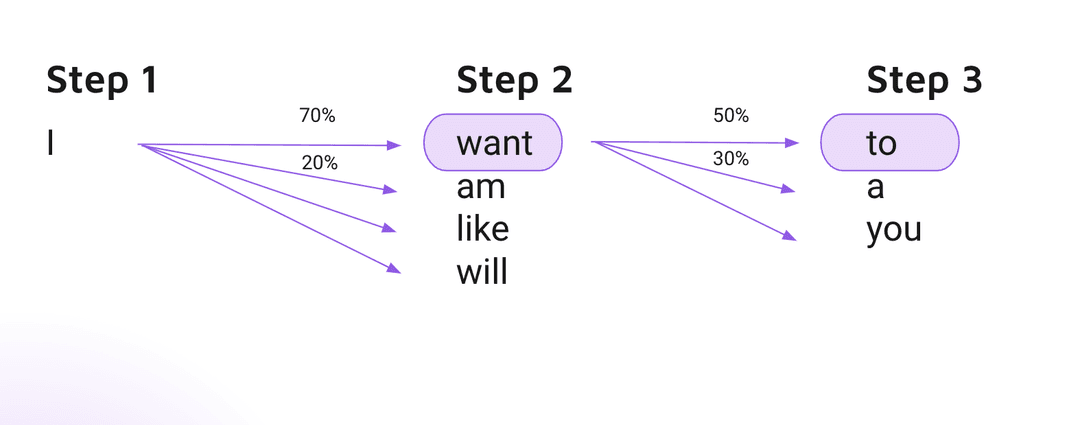

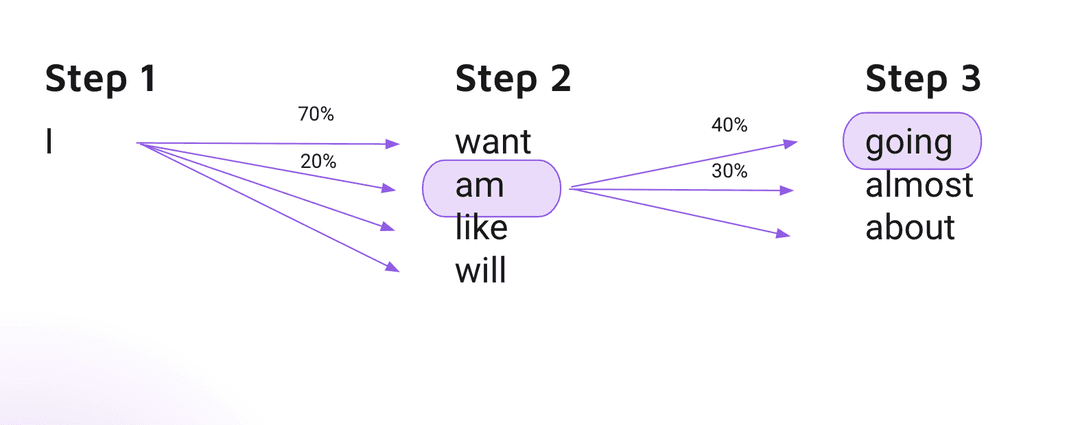

So how does inferencing work? It's actually quite simple: the model predicts one token at a time, using all of the preceding tokens to predict the next one. At every step, each token in the vocabulary has a probability of occurrence, and depending on the settings you provide, the model either always picks the most probable token or picks randomly among the tokens within a certain probability threshold.

caption: Inference option 1

caption: Inference option 2

The LLM predicts the next token based on ALL of the previous tokens: both those provided in the prompt and those it has generated so far while answering.
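To make the two inference options concrete, here is a toy sketch in TypeScript. The probabilities are made-up numbers, and `pickGreedy`, `pickTopP` and `generate` are hypothetical helpers rather than any model's real API. "A random token within a certain threshold" is illustrated with top-p (nucleus) sampling, which is one common way of doing it; real inference engines also expose other settings such as temperature and top-k.

```typescript
// A toy next-token distribution: token -> probability (made-up numbers).
type Distribution = Map<string, number>;

// Option 1 (greedy): always pick the single most probable token.
function pickGreedy(dist: Distribution): string {
  let best = "";
  let bestP = -Infinity;
  for (const [token, p] of dist) {
    if (p > bestP) {
      best = token;
      bestP = p;
    }
  }
  return best;
}

// Option 2 (top-p / nucleus): keep the most probable tokens until their
// cumulative probability reaches topP, then sample randomly among them.
function pickTopP(dist: Distribution, topP: number, rand: () => number = Math.random): string {
  const sorted = [...dist.entries()].sort((a, b) => b[1] - a[1]);
  const kept: [string, number][] = [];
  let cumulative = 0;
  for (const entry of sorted) {
    kept.push(entry);
    cumulative += entry[1];
    if (cumulative >= topP) break;
  }
  // Sample in proportion to each kept token's probability.
  let r = rand() * cumulative;
  for (const [token, p] of kept) {
    r -= p;
    if (r <= 0) return token;
  }
  return kept[kept.length - 1][0]; // guard against floating-point leftovers
}

// The generation loop: every step conditions on ALL tokens so far,
// including the ones the model has just generated.
function generate(
  promptTokens: string[],
  nextDist: (context: string[]) => Distribution,
  steps: number,
  pick: (dist: Distribution) => string,
): string[] {
  const tokens = [...promptTokens];
  for (let i = 0; i < steps; i++) {
    tokens.push(pick(nextDist(tokens)));
  }
  return tokens;
}
```

With `topP` close to 0 the sampler behaves like greedy decoding; raising it lets less likely tokens through, which is roughly why the same prompt can produce different responses from run to run.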

If I were to draw a parallel with us humans, the LLM is akin to our brain. The prompts that we write for the model (which can come in the form of a task, context or persona) are akin to our history, the environment we grew up in, our personality and our intentions.

caption: Models vs Humans

The combination of these gives rise to the prediction of tokens (LLMs) or words (humans).

Now how many of you in the audience can relate to this? Do you think that you know exactly what you are saying in advance, or does the next word only come to you as you are speaking?

Ok, let's finally get into improving our prompts.

Here's a really useful resource that has helped me, and will surely help you too.

These are the 6 areas that you should pay attention to when writing your next prompt.

- Task: What do you want the model to do?

- Context: What information does the model need to know to do the task?

- Examples: What are some examples of the output you want?

- Persona: Who is the model speaking as?

- Format: How should the output be formatted? Email, Markdown, code, etc.

- Tone: What is the tone/vocal style of the output?
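If it helps to think of the six areas as slots in a template, here is a minimal sketch of a prompt builder. The `PromptParts` shape, the `buildPrompt` helper and the order in which the parts are joined are all assumptions for illustration, not a standard format; only the task is required, mirroring the idea that you rarely need all six.

```typescript
// The six areas from the list above; only the task is required (hypothetical shape).
interface PromptParts {
  task: string;
  context?: string;
  examples?: string[];
  persona?: string;
  format?: string;
  tone?: string;
}

// Join whichever parts are present into a single prompt string.
// The order (persona, context, task, examples, format, tone) is an assumption.
function buildPrompt(parts: PromptParts): string {
  const sections: string[] = [];
  if (parts.persona) sections.push(`You are ${parts.persona}.`);
  if (parts.context) sections.push(parts.context);
  sections.push(parts.task);
  if (parts.examples && parts.examples.length > 0) {
    sections.push(`Here are some examples of suitable output:\n${parts.examples.join("\n")}`);
  }
  if (parts.format) sections.push(`Format your answer as: ${parts.format}`);
  if (parts.tone) sections.push(`Tone: ${parts.tone}`);
  // A blank line between sections keeps each part visually distinct.
  return sections.join("\n\n");
}

const examplePrompt = buildPrompt({
  persona: "an Interview Coach with 20 years of experience",
  context: "I am going for an interview for a social media marketing role in Brunei.",
  task: "Identify the top 3 key areas of digital citizenship that I should be aware of.",
  tone: "strict and sarcastic",
});
console.log(examplePrompt);
```

Any part you leave out is simply skipped, so you can start with just a task and add context, persona or format as you learn what you want.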

You do not need to provide all 6 in every prompt. However, providing more tends to give you better results. It is completely normal to start without any of these, especially when you don't yet know exactly what you want. As you observe the outputs, you will naturally start to distil your own thoughts and preferences, and learn what to include in your prompts.

In my opinion, the two most important areas are Task and Context. The Task is the instruction that you want the model to do, and the Context is the information that the model needs to know to carry out the task.

Let's go through this using an example topic: Digital Citizenship.

The task is the core instruction or objective you want the model to fulfill. Avoid vague, open-ended requests; instead, provide concrete parameters around the expected output, such as length, format, tone, or level of detail.

Additionally, consider structuring the task prompt as a complete sentence or instruction, rather than a simple phrase. This can help the model better understand the context and intent behind the prompt.

What is digital citizenship?

Explain the term digital citizenship in 100 words or less.

Tell me about digital citizenship.

Identify the top 3 key areas of digital citizenship that I should be aware of.


In the improved prompts, we have specified the length of the response and limited the explanation to the top 3 key areas. This helps the model focus on the most important aspects of digital citizenship.

The context refers to the additional information the model needs to understand in order to effectively complete the task. This could include background details, relevant constraints, or specific requirements.

The more specific and grounded in reality the context, the more meaningful and applicable the model's response will be. Avoid generic or vague context that leaves the model guessing.

Now let's add some context to the prompt.

Identify the top 3 key areas of digital citizenship that I should be aware of.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of.


By adding this contextual information, you give the model a clear understanding of the target audience, the objective, and the specific areas to focus on. This will result in a more tailored and actionable response.

Providing relevant examples within your prompts can be a powerful technique to guide the model and shape its output. Examples serve two key purposes:

- Illustrate the expected format, structure, or style of the desired output.

- Offer sample content that the model can use as a reference point.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of. Here is an example of a suitable output: Programming language Python: Python is one of the most commonly used coding languages to work with AI. Application: Start and organize classes on using AI to fine-tune models.


In the improved prompt, we are guiding the model to not just list the key areas, but also indicate how we can "apply" each of them in our role as a social media marketer.

Think of the persona as the character or role of the person you wish you were talking with to achieve the task at hand.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of.

You are an Interview Coach with 20 years of experience in helping individuals market themselves and proactively get themselves ready for their next job application. I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of.


In the improved prompt, I have specified the persona of the person I would want to receive this advice from.

I have previously produced a working example that illustrates the persona of an Asian uncle who always scolds or comments on you. Check it out here.

Clearly specifying the desired output format can help the model structure its response appropriately. This could include details like:

- Content structure (paragraphs, bullet points, etc.)

- Markup (Markdown, HTML, JSON)

- Code syntax (Python, JavaScript)

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of. Provide your answer in a bullet list with the format: [topic] [description] [methods to apply]


Here I have not only specified that the format should be bullets, but also provided a structure for each bullet. This is quite similar to the "example" section, but here we are being more generic.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of.

I am going for an interview with a company in Brunei that is looking to hire a social media marketing person to promote and educate their audience on digital citizenship. Identify the top 3 key areas of digital citizenship that I should be aware of. Be as strict and sarcastic as possible, otherwise I won’t take you seriously.


Here, to facilitate my own learning process, I prefer when someone is strict and sarcastic with me. If they are too nice, I might not take their advice seriously. So I have specified the tone that I want the model to use.

Based on the given text, grade the degree of digital citizenship

{{input}}

Assign a grade to the given text based on the level of digital citizenship.

Beginner: Struggles to identify credible sources, sharing unverified content

Intermediate: Can differentiate between credible and unreliable sources, uses basic digital tools effectively.

Advanced: Actively evaluates sources, cross-checks information, promotes digital literacy among peers

{{input}}

Here I have specified the grading criteria for the model to follow. This will help the model to understand the different levels of digital citizenship and provide a more consistent response.
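The `{{input}}` placeholder in these prompts suggests the rubric is reused as a template, with each piece of text substituted in before it is sent to the model. Here is a minimal sketch of that substitution, assuming the `{{name}}` syntax used in this article; `fillTemplate` is a hypothetical helper, not part of any particular templating library, and the sample post is made up.

```typescript
// Replace every {{name}} placeholder in a template with the matching value.
// Throws on a missing value so an unfilled placeholder never reaches the model.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) => {
    if (!Object.prototype.hasOwnProperty.call(values, key)) {
      throw new Error(`Missing value for placeholder: ${key}`);
    }
    return values[key];
  });
}

// The grading rubric, stored once and reused for every input.
const gradingTemplate = [
  "Assign a grade to the given text based on the level of digital citizenship.",
  "",
  "Beginner: Struggles to identify credible sources, sharing unverified content",
  "Intermediate: Can differentiate between credible and unreliable sources, uses basic digital tools effectively.",
  "Advanced: Actively evaluates sources, cross-checks information, promotes digital literacy among peers",
  "",
  "{{input}}",
].join("\n");

const filled = fillTemplate(gradingTemplate, {
  input: "Sharing this now!! My cousin said it's true so it must be.", // hypothetical post
});
console.log(filled);
```

Keeping the rubric fixed and only swapping the input is what makes the grades comparable across many posts.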

Assign a grade to the given text based on the level of digital citizenship.

Beginner: Struggles to identify credible sources, sharing unverified content

Intermediate: Can differentiate between credible and unreliable sources, uses basic digital tools effectively.

Advanced: Actively evaluates sources, cross-checks information, promotes digital literacy among peers

{{input}}

Assign a grade to the given text based on the level of digital citizenship.

...

You will be provided with text obtained from the social media profile of an Instagram account, including their posts and the accompanying comments.

{{input}}

Here I have added context to the prompt by specifying that the text will be obtained from the social media profile of an Instagram account. This helps the model understand the source of the text and the type of text to expect.

Assign a grade to the given text based on the level of digital citizenship.

…

You will be provided with text obtained from the social media profile of an Instagram account, including their posts and the accompanying comments.

{{input}}

Assign a grade to the given text based on the level of digital citizenship.

…

Example Input:

Post Caption: Lorem ipsum dolor sit amet …

Example Output:

```json
{
  "extractedText": "...",
  "grade": "beginner"
}
```

{{input}}


Here I have explicitly provided an example of what the input might be and what the expected output should look like. This will help the model to understand the structure of the input and the expected output.
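Hand-written example outputs are where small mistakes creep in, such as a missing comma or curly quotes that make the "JSON" invalid. One way to avoid that, assuming you assemble prompts in code, is to keep few-shot examples as data and serialise them with `JSON.stringify`. `FewShotExample` and `buildFewShotPrompt` are hypothetical names for this sketch; the "Example Input:" / "Example Output:" labels follow the prompt in this article.

```typescript
// One worked example: the input and the output we expect for it (hypothetical shape).
interface FewShotExample {
  input: string;
  output: { extractedText: string; grade: "beginner" | "intermediate" | "advanced" };
}

// Build a few-shot prompt: instructions, then each worked example, then the real input.
// JSON.stringify always emits valid JSON: straight quotes and commas in the right places.
function buildFewShotPrompt(instructions: string, examples: FewShotExample[], input: string): string {
  const shots = examples.map(
    (ex) => `Example Input:\n${ex.input}\n\nExample Output:\n${JSON.stringify(ex.output, null, 2)}`,
  );
  return [instructions, ...shots, input].join("\n\n");
}

const fewShotPrompt = buildFewShotPrompt(
  "Assign a grade to the given text based on the level of digital citizenship.",
  [
    {
      input: "Post Caption: Lorem ipsum dolor sit amet",
      output: { extractedText: "Lorem ipsum dolor sit amet", grade: "beginner" },
    },
  ],
  "Post Caption: Just read three articles before sharing this one.", // hypothetical input
);
console.log(fewShotPrompt);
```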

Assign a grade to the given text based on the level of digital citizenship.

...

You will be provided with text obtained from the social media profile of an Instagram account, including their posts and the accompanying comments.

{{input}}

Assign a grade to the given text based on the level of digital citizenship.

…

Provide your response in JSON according to the following TypeScript format:

```typescript
type GradedText = {
  extractedText: string; // this is the excerpt obtained from the post
  grade: 'beginner' | 'intermediate' | 'advanced';
};
```

{{input}}


Here I have specified the output format as a TypeScript type, which helps the model produce the output in the desired format.
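Specifying a TypeScript type in the prompt guides the model, but it does not guarantee the reply matches: TypeScript types are erased at runtime, so the JSON the model returns still has to be checked. Here is a minimal sketch of that check, assuming the response is plain JSON, possibly wrapped in a markdown code fence; `isGradedText` and `parseGradedText` are hypothetical helpers.

```typescript
type Grade = "beginner" | "intermediate" | "advanced";

type GradedText = {
  extractedText: string; // the excerpt obtained from the post
  grade: Grade;
};

const GRADES: readonly string[] = ["beginner", "intermediate", "advanced"];

// TypeScript types are erased at runtime, so check the parsed JSON by hand.
function isGradedText(value: unknown): value is GradedText {
  if (typeof value !== "object" || value === null) return false;
  const { extractedText, grade } = value as { extractedText?: unknown; grade?: unknown };
  return typeof extractedText === "string" && typeof grade === "string" && GRADES.includes(grade);
}

// Parse the raw model response; returns null if it is not valid GradedText.
function parseGradedText(raw: string): GradedText | null {
  // Models sometimes wrap JSON in a markdown code fence; strip it (\x60 is a backtick).
  const cleaned = raw.trim().replace(/^\x60{3}(?:json)?\s*/, "").replace(/\s*\x60{3}$/, "");
  try {
    const parsed: unknown = JSON.parse(cleaned);
    return isGradedText(parsed) ? parsed : null;
  } catch {
    return null; // not valid JSON at all, e.g. curly quotes or a missing comma
  }
}
```

Returning `null` instead of throwing lets the caller decide whether to retry the prompt or skip the post.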

Encouraging the model to explain its reasoning before providing the final answer can usually lead to more accurate and detailed responses.

This is one of the most useful phrases to include in a prompt:

think step by step.
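In an application you often want both the reasoning and a clean final answer. One hedged way to get both is to ask for the conclusion on a marked line and split the response on it. The `Final answer:` marker and the helper names below are assumptions of this sketch, and a model may occasionally ignore the marker, so the parser returns `null` for the answer in that case.

```typescript
// A fixed marker for the conclusion; this wording is an assumption, not a standard.
const ANSWER_MARKER = "Final answer:";

// Append the step-by-step instruction plus a request for a marked final line.
function withStepByStep(basePrompt: string): string {
  return `${basePrompt}\n\nThink step by step. When you are done, write your conclusion on its own line starting with "${ANSWER_MARKER}".`;
}

// Keep the reasoning for inspection, but pull out only the final answer.
function extractFinalAnswer(response: string): { reasoning: string; answer: string | null } {
  const index = response.lastIndexOf(ANSWER_MARKER);
  if (index === -1) return { reasoning: response.trim(), answer: null };
  return {
    reasoning: response.slice(0, index).trim(),
    answer: response.slice(index + ANSWER_MARKER.length).trim(),
  };
}
```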


Ambiguity is the enemy of effective prompts. Be as clear and specific as possible.

Instructions like "make it good", "better" or "best" are subjective and can lead to a wide range of outputs. Instead, you have to specify what you mean by good, better or best.

If you can't elaborate, you can always get the LLM to generate examples for you, just as I have done in this article.

be clear and concise.


One way to prevent or limit hallucinations is to state in the prompt that the model may answer with "don't know" or simply return "null" (or an equivalent).

Otherwise, the model will tend to be overconfident and always give you an answer.
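On the application side, this escape hatch only helps if your code treats a "don't know" reply as missing data rather than as an answer. Here is a minimal sketch, assuming the instruction wording and the list of "unknown" replies shown here; both are illustrative choices, not a standard.

```typescript
// Give the model explicit permission not to know (wording is an assumption).
function allowUnknown(basePrompt: string): string {
  return `${basePrompt}\n\nIf the answer is not in the provided text or you are not sure, reply with exactly: null`;
}

// Treat "null", "don't know" and similar replies as "no answer" instead of a fact.
function normaliseAnswer(response: string): string | null {
  const text = response.trim();
  // Lowercase and drop full stops and apostrophes (straight or curly) before comparing.
  const lowered = text.toLowerCase().replace(/[.'’]/g, "");
  const unknowns = ["null", "dont know", "i dont know", "unknown", ""];
  return unknowns.includes(lowered) ? null : text;
}
```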

Most of the prominent models today have multi-modal capability, i.e. the ability to accept images as an input. This can be a powerful tool to help articulate the problem, especially when you are not sure how to describe it in words.

This is especially useful when you are coding, and you've encountered a bug that transcends your immediate code, but could be due to the program, software and environment that you are using. Simply take a screenshot of everything and ask the model to help you debug. (But be cautious if you are working with sensitive work related data!)

Mastering prompting takes practice and experimentation. Try different approaches and refine your prompts based on the results.

Focus on crafting clear, specific prompts that align with your goals. Leverage visuals when helpful. Approach prompting with curiosity and an iterative mindset.

Keep honing your prompting skills - they'll serve you well across many applications. Refer to the resources below or reach out to me if you need further assistance.

Here are some resources that helped me produce this article, which you might also find useful.